RNA: Relightable Neural Assets

Relightable Neural Assets (RNA) is a neural representation for 3D assets with complex shading that supports full relightability and full integration into existing production renderers for Monte Carlo path tracing.

Abstract

High-fidelity 3D assets with materials composed of fibers (including hair), complex layered material shaders, or fine scattering geometry are ubiquitous in high-end realistic rendering applications. Rendering such models is computationally expensive due to heavy shaders and long scattering paths. Moreover, implementing the shading and scattering models is non-trivial and has to be done not only in the 3D content authoring software (which is necessarily complex), but also in all downstream rendering solutions. For example, web and mobile viewers for complex 3D assets are desirable, but frequently cannot support the full shading complexity allowed by the authoring application.

Our goal is to design a neural representation for 3D assets with complex shading that supports full relightability and full integration into existing renderers. We provide an end-to-end shading solution at the first intersection of a ray with the underlying geometry. All shading and scattering is precomputed and included in the neural asset; no multiple scattering paths need to be traced, and no complex shading models need to be implemented to render our assets, beyond a single neural architecture.

We combine an MLP decoder with a feature grid. Shading consists of querying a feature vector, followed by an MLP evaluation producing the final reflectance value. Our method provides high-fidelity shading, close to the ground-truth Monte Carlo estimate even at close-up views. We believe our neural assets could be used in practical renderers, providing significant speed-ups and simplifying renderer implementations.

Method

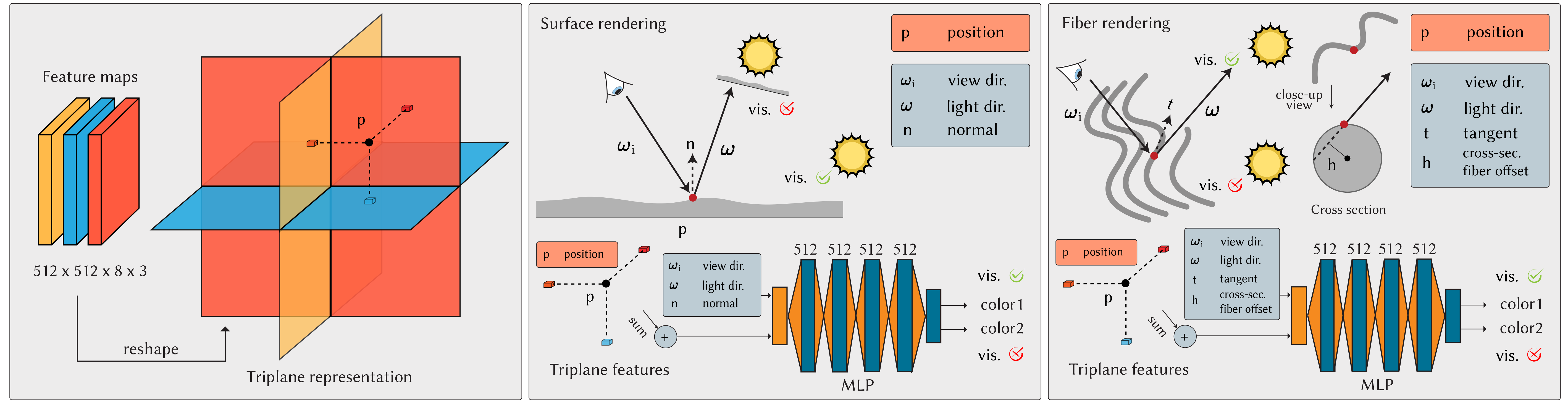

Overview of the Relightable Neural Assets representation. On the left, we illustrate our triplane representation, consisting of XY, XZ and YZ planes, each with 8 feature channels and a default resolution of 512 × 512. The feature vectors queried from the three planes are summed and passed into an MLP, along with additional properties. We show two configurations of the relightable neural asset pipeline, designed for surface rendering (middle) and fiber rendering (right), respectively. The main difference is that surfaces take a normal as input, while fibers take a tangent and a cross-section offset. Both variants output two colors, one of which is picked according to visibility at render time.
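The lookup described in the caption can be sketched in a few lines of NumPy. This is a minimal illustration of the surface variant, not the authors' implementation: the weights are random rather than trained, the plane resolution is reduced from 512 to 64 to keep the example light, and the MLP width, the ReLU activation, the view and light direction inputs, and the mapping of the two output colors to the "light visible" and "light occluded" cases are all assumptions. The names `query_triplane` and `shade_surface` are hypothetical.

```python
import numpy as np

RES = 64       # the caption's default is 512; reduced here to keep the sketch small
CHANNELS = 8   # feature channels per plane, as stated in the caption
HIDDEN = 32    # hypothetical hidden-layer width

rng = np.random.default_rng(0)

# Three feature planes (XY, XZ, YZ); random values stand in for trained features.
planes = {name: rng.standard_normal((RES, RES, CHANNELS)).astype(np.float32)
          for name in ("xy", "xz", "yz")}

def bilinear(plane, u, v):
    """Bilinearly interpolate a (RES, RES, C) plane at coordinates u, v in [0, 1]."""
    res = plane.shape[0]
    x = float(np.clip(u, 0.0, 1.0)) * (res - 1)
    y = float(np.clip(v, 0.0, 1.0)) * (res - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, res - 1), min(y0 + 1, res - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * plane[y0, x0] + fx * plane[y0, x1]
    bottom = (1 - fx) * plane[y1, x0] + fx * plane[y1, x1]
    return (1 - fy) * top + fy * bottom

def query_triplane(p):
    """Project p (assumed normalized to [0, 1]^3) onto each plane and sum the features."""
    x, y, z = p
    return (bilinear(planes["xy"], x, y)
            + bilinear(planes["xz"], x, z)
            + bilinear(planes["yz"], y, z))

# Tiny MLP: summed features plus normal, view and light directions -> two RGB colors.
# The extra inputs beyond the normal are assumptions, not taken from the caption.
IN_DIM = CHANNELS + 9
W1 = rng.standard_normal((IN_DIM, HIDDEN)).astype(np.float32) * 0.1
b1 = np.zeros(HIDDEN, dtype=np.float32)
W2 = rng.standard_normal((HIDDEN, 6)).astype(np.float32) * 0.1
b2 = np.zeros(6, dtype=np.float32)

def shade_surface(p, normal, view_dir, light_dir, light_visible):
    """Evaluate the sketch network and pick one of its two output colors."""
    feat = query_triplane(p)
    x = np.concatenate([feat, normal, view_dir, light_dir])
    h = np.maximum(x @ W1 + b1, 0.0)          # ReLU hidden layer (assumed)
    out = h @ W2 + b2
    color_visible, color_occluded = out[:3], out[3:]
    # The renderer supplies the visibility result; the network outputs both cases.
    return color_visible if light_visible else color_occluded

color = shade_surface(
    p=np.array([0.3, 0.5, 0.7]),
    normal=np.array([0.0, 0.0, 1.0]),
    view_dir=np.array([0.0, 0.0, 1.0]),
    light_dir=np.array([0.0, 1.0, 0.0]),
    light_visible=True,
)
```

In a renderer, the `light_visible` flag would come from a shadow ray traced toward the light, so the network itself never needs to trace secondary paths.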

IBL relighting

IBL relighting results on surface assets. We render two surface assets, the translucent Lego and a basket of flowers, both featuring subsurface scattering, under four different image-based lighting (IBL) environments with a shadow-catching ground plane. The lighting conditions range from sharp outdoor sunlight to indoor office light, demonstrating that our model responds faithfully to changes in illumination.

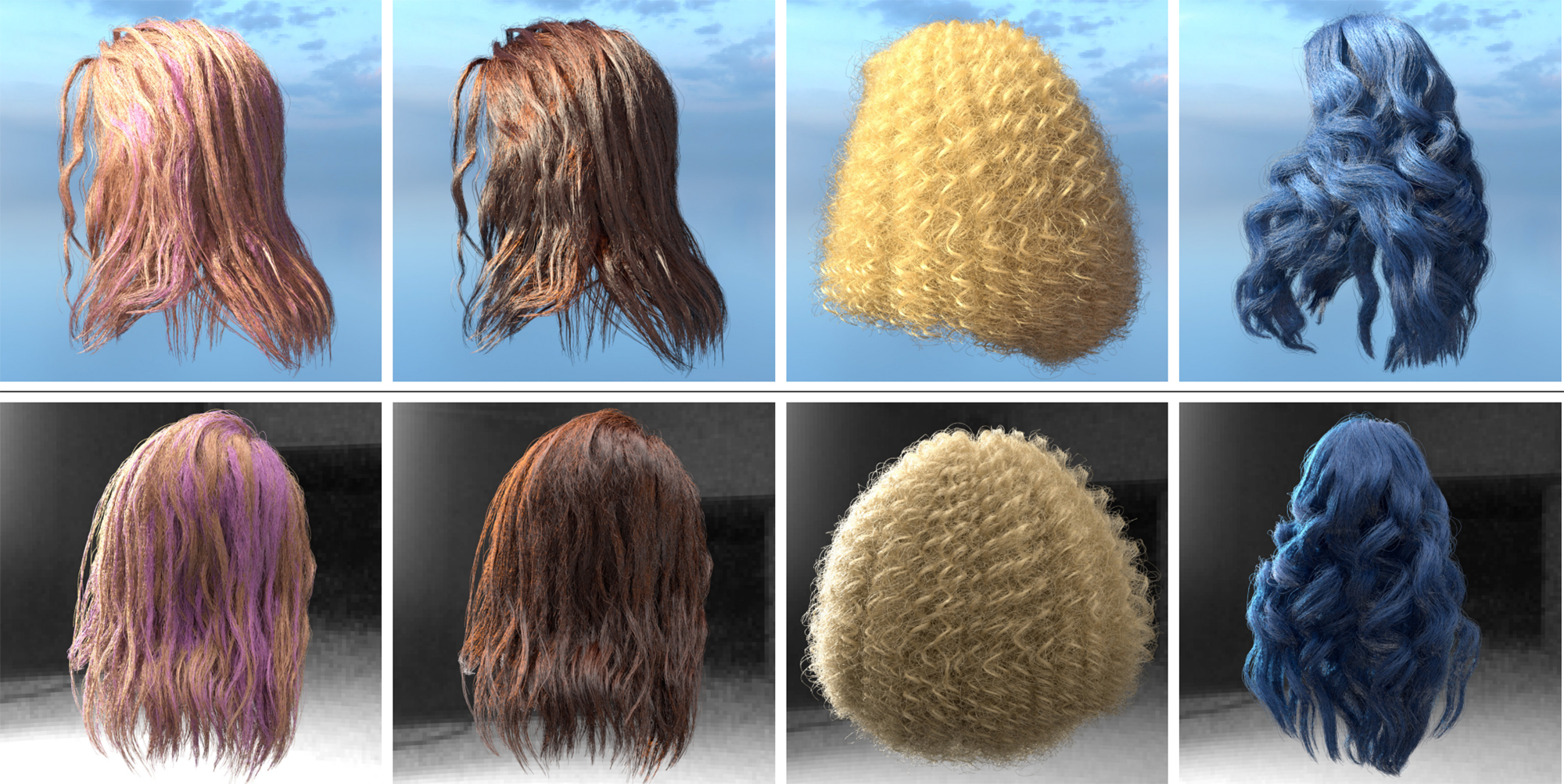

IBL relighting results on fiber assets. We render four different hair assets with complex scattering effects under IBL environments including sunlight and studio light. Our model captures the texture of glinty specular highlights and the soft, diffusion-like appearance caused by multiple fiber interactions. It preserves the lifelike look of individual hair strands and the photorealism of the overall hair appearance, achieving reasonable visual accuracy while responding to varying illumination.

Production renderer integration

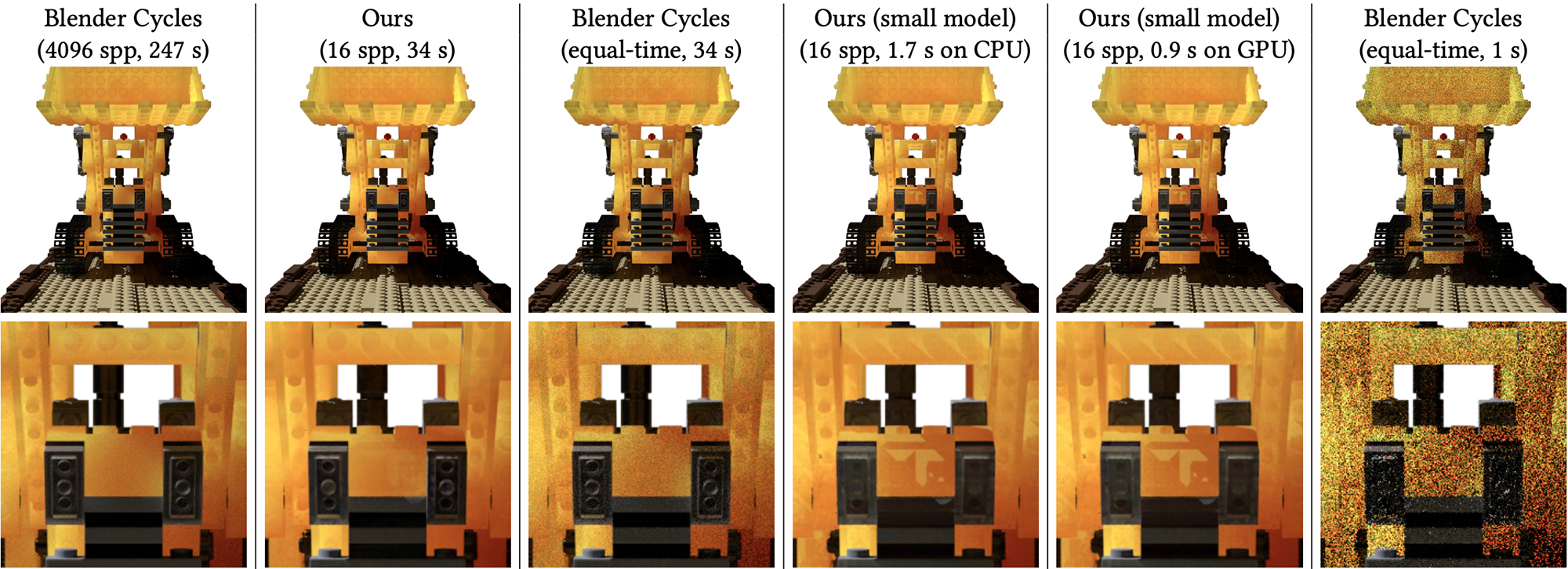

Path tracer integration on a surface-based asset. We integrate surface models into a production path tracer for both CPU and GPU rendering. Our model significantly simplifies the shader implementation and improves rendering performance. In contrast, Blender path tracing exhibits severe Monte Carlo noise at an equal rendering-time budget.

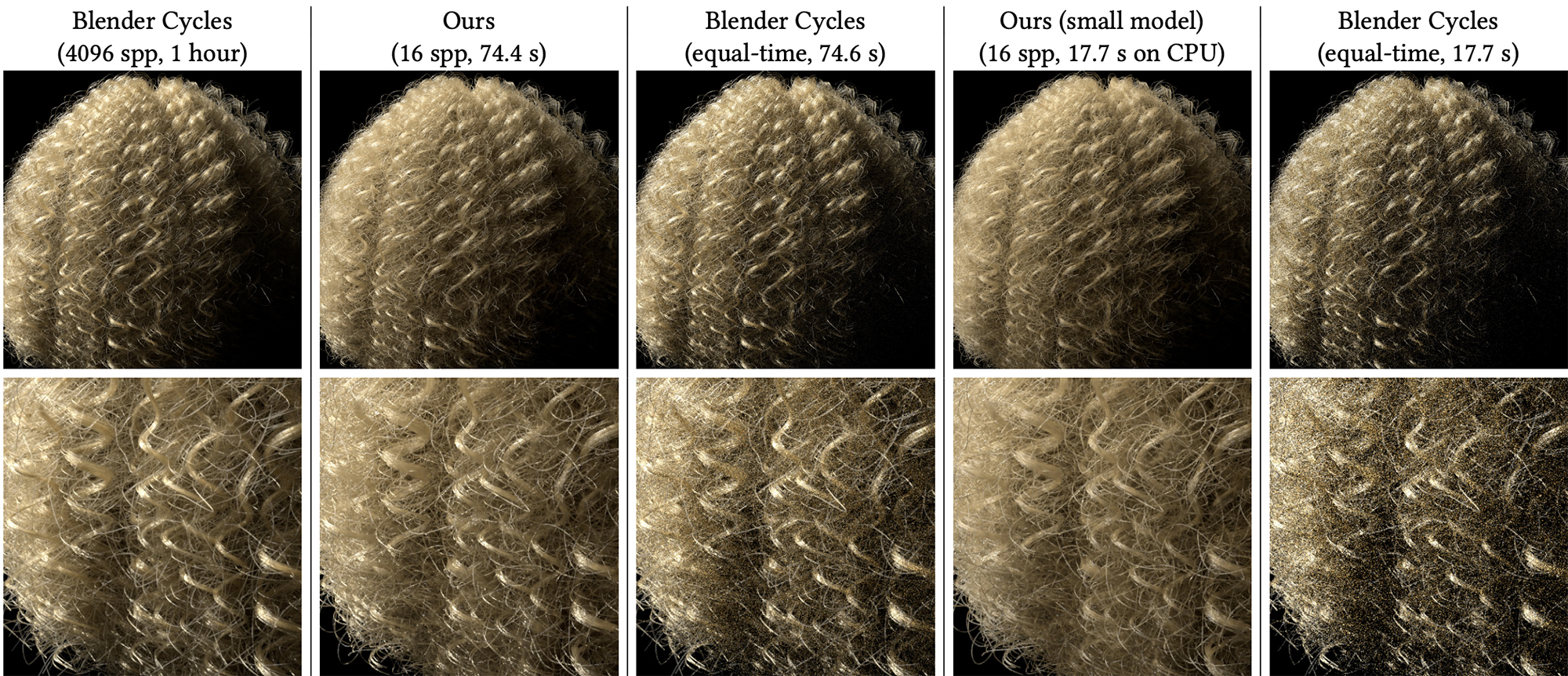

Path tracer integration on a fiber-based asset. We integrate hair models into a production path tracer for CPU rendering. Our model significantly simplifies the shader implementation and improves rendering performance. In contrast, Blender path tracing exhibits severe Monte Carlo noise at an equal rendering-time budget.

Scene from the teaser, path traced with global illumination under outdoor sun-sky lighting. The blue and blonde hair wigs, the subsurface-scattering Lego, and the basket of flowers are rendered using our relightable neural 3D asset representation.

Interactive Captures (Small Model)

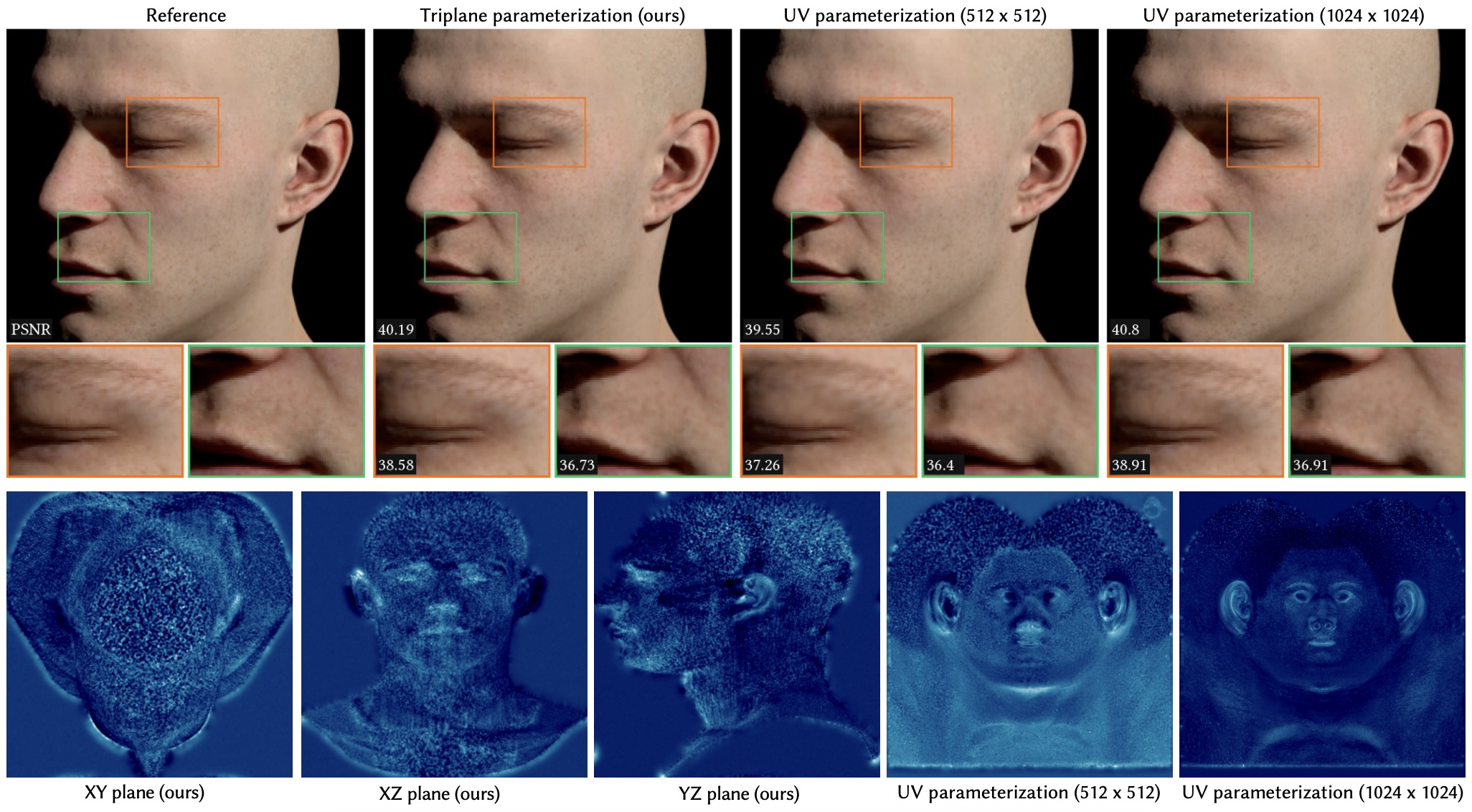

Triplane vs. UV parameterization

Ablation on the triplane vs. UV parameterization. We examine triplane and UV parameterization on the head asset. UV parameterization at 512 × 512 resolution results in blurred details, becoming sharper only at 1024 × 1024. Conversely, the triplane representation provides satisfactory detail at 512 × 512.
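As a rough storage comparison for the resolutions in this ablation, the number of stored feature values can be counted directly. The sketch below assumes the UV grid stores the same 8 channels per texel as each triplane plane, which the ablation text does not state; `grid_features` is a hypothetical helper.

```python
CHANNELS = 8  # per-plane channel count from the method overview; assumed for the UV grid too

def grid_features(num_planes, resolution, channels=CHANNELS):
    """Total number of stored feature values for square feature planes."""
    return num_planes * resolution * resolution * channels

triplane_512 = grid_features(3, 512)   # three 512 x 512 planes
uv_512 = grid_features(1, 512)         # one 512 x 512 UV grid
uv_1024 = grid_features(1, 1024)       # one 1024 x 1024 UV grid

print(triplane_512, uv_512, uv_1024)   # 6291456 2097152 8388608
print(triplane_512 / uv_1024)          # 0.75
```

Under that assumption, the triplane at 512 × 512 holds three quarters of the values of the 1024 × 1024 UV grid that is needed for comparably sharp detail.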

BibTeX

@article{mullia2024rna,
  author = {Mullia, Krishna and Luan, Fujun and Sun, Xin and Ha\v{s}an, Milo\v{s}},
  title = {RNA: Relightable Neural Assets},
  year = {2024},
  issue_date = {February 2025},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {44},
  number = {1},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3695866},
  doi = {10.1145/3695866},
  journal = {ACM Trans. Graph.},
  month = oct,
  articleno = {2},
  numpages = {19},
  keywords = {Rendering, raytracing, neural rendering, global illumination, relightable neural assets}
}